Introduction

Technological advancements have transformed dental and maxillofacial workflows, catalysing a paradigm shift toward digitised diagnostics, treatment planning, and postoperative follow-up. Central to this evolution is cone-beam computed tomography (CBCT), a cornerstone imaging modality that overcomes the inherent limitations of conventional two-dimensional radiography by delivering high-resolution, three-dimensional anatomical reconstructions [1,2]. Its capacity to delineate complex craniofacial structures with precision has rendered CBCT indispensable for preoperative planning, particularly in identifying critical regions such as the mandibular canal (MC) [3,4].

Located within the MC, the inferior alveolar nerve (IAN), a component of the mandibular neurovascular bundle, provides sensory innervation to the mandibular dentition, lower lip, chin, and associated soft tissues while also facilitating motor function in masticatory muscles [5]. Precise localisation of the IAN and its spatial orientation relative to adjacent anatomical landmarks is imperative for mitigating iatrogenic injury during procedures such as dental implant placement and third molar extraction [6,7]. Despite advancements, IAN injury persists as a clinically significant complication, with reported incidence rates ranging from 0.4% to 13.4% [8,9]. Such injuries may manifest as transient hypoesthesia or irreversible paraesthesia, profoundly impairing patients’ functional and psychosocial well-being [10]. Consequently, meticulous preoperative mapping of the MC, inclusive of anatomical variations, is critical to minimising procedural risk. Nevertheless, achieving consistent and accurate segmentation of the MC, a surrogate for IAN localisation, remains a persistent challenge in clinical practice.

Current segmentation methodologies in medical imaging span manual, semi-automated, and automated approaches. Manual segmentation, traditionally regarded as the gold standard, necessitates expert-driven, slice-by-slice annotation of the MC on CBCT scans [11]. While this technique achieves high anatomical fidelity, it is labour-intensive, time-consuming, and susceptible to inter- and intra-operator variability [12]. Semi-automated algorithms integrated into commercial CBCT software partially alleviate these constraints by allowing users to demarcate discrete points along the MC trajectory, which are interpolated to generate a fixed-diameter cylindrical model [13]. Although this approach reduces segmentation time, it does not eliminate potential operator bias introduced during manual input.

The advent of artificial intelligence (AI), particularly convolutional neural networks (CNNs) and deep learning (DL), has significantly advanced medical image analysis [14,15]. By emulating human cognitive processes through pattern recognition in annotated datasets, AI-driven systems enable rapid, reproducible segmentation of intricate anatomical structures.

Emerging studies highlight the efficacy of AI in segmenting various oral and maxillofacial structures on CBCT images, demonstrating performance metrics comparable to expert manual tracings [16]. In the context of MC segmentation, published research has reported AI-based algorithms achieving accuracy rates of up to 99% [17,18]. However, these studies exhibit several limitations, including inconsistencies in the use and reporting of standardised reference methods [16]. Additionally, while the relationship between the third molar and the MC has been explored with promising results using AI [19], no studies have specifically evaluated the influence of third molar presence (erupted or impacted) or absence on the accuracy of AI-driven segmentation.

This study aims to address these gaps by systematically evaluating the precision and quality of AI-powered MC segmentation in comparison to semi-automated radiologist tracings using a standardised methodology. The semi-automated segmentation, serving as the ground truth reference, involved manually annotating sequential points along the MC trajectory on cross-sectional CBCT views. Building on calibrated parameters from prior research and addressing methodological limitations identified in our pilot investigations, we seek to assess the accuracy of the AI system in this context. Furthermore, we aim to analyse the impact of third molar status on segmentation accuracy. Given the increasing clinical adoption of AI-based tools, rigorous performance evaluation is essential to validate their reliability and applicability in clinical practice.

Material and methods

Image dataset

A total of 150 anonymised CBCT scans from 150 patients (70 males, 80 females) aged 18 to 71 years were retrospectively retrieved from the database of Poznan University of Medical Sciences, Poland. These scans, originally acquired between 2020 and 2023 for preoperative planning of dental implant placement and third molar extractions, were selected based on the following inclusion criteria: patient age of 18 years or older; a field of view (FOV) that fully encompassed the mandible, ensuring comprehensive visualisation of the MC; and the absence of artifacts or pathological anomalies impairing osseous or neurovascular architecture. Scans with insufficient FOV, artifacts, or structural pathologies compromising mandibular integrity were excluded.

All imaging was performed using a CRANEX 3D dental imaging system (Soredex, Milwaukee, USA), with datasets archived in Digital Imaging and Communications in Medicine (DICOM) format. Acquisition parameters adhered to a standardised protocol across all scans: X-ray tube voltage 90 kV, tube current 10 mA, isotropic voxel resolution 0.25 mm, and FOV dimensions ranging from 600 mm × 800 mm to 1600 mm × 1300 mm. These settings were optimised to balance radiation dose minimisation with diagnostic fidelity, ensuring high-contrast resolution for MC segmentation.

Segmentation protocol

The semi-automated segmentation method, widely used and clinically accepted, was chosen as the reference method to assess AI performance. Semi-automated segmentation of the MC was performed using Romexis software (v. 6.2; Planmeca, Helsinki, Finland). Specific points along the canal pathway were manually placed by the investigator on cross-sectional slices. The software then interpolated these markers to generate a continuous cylindrical pathway (diameter: 1.50 mm).
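The interpolation step can be pictured with a minimal sketch. The interpolation scheme used by Romexis is not described in this paper, so the piecewise-linear resampling below is an assumption chosen for simplicity, and the function names and marker coordinates are hypothetical. Only the 1.50 mm tube diameter is taken from the protocol above.

```python
import math

def polyline_length(points):
    """Total length of a 3D polyline through the given control points (mm)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def resample_polyline(points, spacing):
    """Resample a 3D polyline at equal arc-length steps using linear interpolation.

    This is an illustrative stand-in; the vendor software may use a smoother curve.
    """
    resampled = [tuple(points[0])]
    carry = 0.0  # distance travelled since the last emitted sample
    for start, end in zip(points, points[1:]):
        seg_len = math.dist(start, end)
        if seg_len == 0.0:
            continue
        pos = spacing - carry
        while pos <= seg_len:
            t = pos / seg_len
            resampled.append(tuple(s + t * (e - s) for s, e in zip(start, end)))
            pos += spacing
        carry = seg_len - (pos - spacing)
    return resampled

def tube_volume(points, diameter=1.50):
    """Volume of a constant-diameter tube swept along the polyline (mm^3)."""
    radius = diameter / 2.0
    return math.pi * radius ** 2 * polyline_length(points)

# Hypothetical marker positions in mm, for illustration only
markers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
centreline = resample_polyline(markers, spacing=0.5)
```

A fixed-diameter tube ignores local variation in canal width, which is one reason why a surface-based comparison against an anatomically shaped AI segmentation will show some deviation even when the centrelines coincide.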

Segmentations were conducted by an experienced oral and maxillofacial radiologist (with more than 10 years of clinical practice) and a trainee in oral and maxillofacial radiology. To ensure consistency, the operators were calibrated and used the following settings: slice thickness 0.25 mm, sharpness set to zero, contrast set to 1200, and brightness value fixed to 1808. These parameters were chosen based on a study by Issa et al. [20], which identified them as optimal for MC visualisation on CBCT images using Romexis software (v. 6.2; Planmeca, Helsinki, Finland). To assess reproducibility, the segmentation process for all scans was repeated on 2 separate occasions with a 10-day interval.

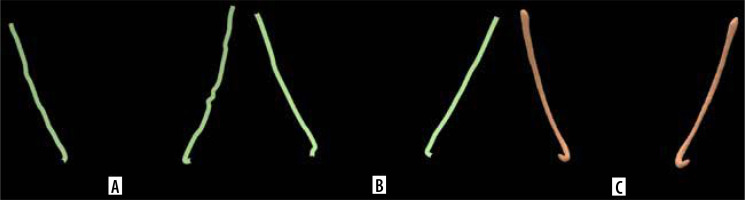

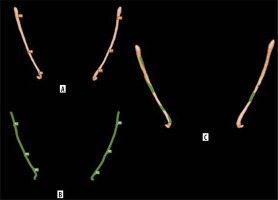

All segmentations were performed on an NEC MultiSync EA245WMi-2 display screen (Sharp NEC Display Solutions, Tokyo, Japan) under optimal ambient lighting conditions. Bilateral MCs from each scan were then exported as a unified stereolithography (STL) file for subsequent analysis (Figure 1).

Figure 1

Mandibular canal segmentation. A) Semi-automatic segmentation performed by the first investigator. B) Semi-automatic segmentation performed by the second investigator. C) Automatic segmentation performed by Diagnocat

For the automated segmentation, the same CBCT datasets were processed using Diagnocat (DGNCT LLC, Miami, USA), a cloud-based AI platform utilising a U-Net-like CNN architecture. The CBCT images were uploaded to the software, and the automatic segmentation function was activated to segment the entire scanned region. The segmented bilateral MCs in each image, generated automatically, were then exported as an STL file for analysis (Figure 1).

Evaluation of 3D models

CloudCompare v.2.13.alpha (open-source software, http://www.cloudcompare.org/) was used to overlap and visualise the segmented MCs generated by both the investigators and the AI-based software for the same CBCT image. This process aimed to assess the segmentation accuracy of the AI in comparison to the semi-automatic segmentations performed by the investigators. The evaluation focused on quantifying volumetric deviations to determine the level of agreement between the segmentation methods.

As part of this analysis, each investigator’s segmented MC (STL file) was individually overlapped with the AI-generated segmentation from the corresponding scan. The computed distances between the models were then averaged to obtain a global average measure of segmentation deviation. The process began with a pre-registration step using the 3-points method, in which 3 anatomically relevant landmarks, the mandibular foramen, molar region, and premolar region, were manually placed on both 3D models to ensure proper spatial alignment (Figure 2).

Figure 2

Alignment of mandibular canal segmentation (STL file). A) Automatic segmentation performed by Diagnocat with 3 points for alignment. B) Semi-automatic segmentation performed by the second investigator with 3 points for alignment. C) Aligned and overlapped structures
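As an illustration of landmark-based pre-registration, the sketch below estimates a rigid transform (rotation and translation) from three paired landmarks using the Kabsch least-squares method. The paper does not state how the software computes this alignment internally, so the method, the function name, and the landmark coordinates are all assumptions made for illustration.

```python
import numpy as np

def rigid_transform_from_landmarks(source, target):
    """Least-squares rigid transform (Kabsch) mapping source landmarks onto target.

    source, target: (N, 3) arrays of paired landmark coordinates, N >= 3,
    not all collinear. Returns rotation R (3x3) and translation t (3,).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # Cross-covariance of the centred landmark sets
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a proper rotation, not a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t

# Hypothetical landmarks (mm): mandibular foramen, molar region, premolar region
ai_landmarks = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 5.0, 0.0]])
R_true = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])  # 90 degrees about z
t_true = np.array([2.0, -3.0, 1.0])
expert_landmarks = ai_landmarks @ R_true.T + t_true

R, t = rigid_transform_from_landmarks(ai_landmarks, expert_landmarks)
aligned = ai_landmarks @ R.T + t  # AI landmarks expressed in the expert model's frame
```

With only three manually placed points, small placement errors translate directly into rotational error, so a landmark step of this kind is best regarded as a coarse alignment.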

Following this alignment, the “compute cloud/mesh distance” function in CloudCompare was applied to calculate the spatial deviations between the overlapping 3D models. This function provided numerical outputs, including the average and maximum distances between the 2 surfaces. The overlap parameter was left at the software’s default value of 100, ensuring a full 3D alignment for precise deviation analysis.
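To make the distance outputs concrete, the sketch below computes nearest-neighbour distances from each vertex of one model to the vertices of the other and summarises them. This is a simplification and not the software's algorithm: a cloud-to-mesh comparison measures the distance to the nearest point on the reference surface, whereas this vertex-to-vertex version can only overestimate it. Function names and coordinates are hypothetical.

```python
import math
import statistics

def nearest_distances(compared, reference):
    """Distance from each compared vertex to its nearest reference vertex (mm).

    Brute-force search, adequate for small illustrative inputs only.
    """
    return [min(math.dist(p, q) for q in reference) for p in compared]

def summarise_distances(distances):
    """Average, sample standard deviation, and maximum of the distances."""
    return {
        "mean": statistics.fmean(distances),
        "sd": statistics.stdev(distances),  # sample SD is an assumption
        "max": max(distances),
    }

# Hypothetical vertices in mm, for illustration only
ai_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
expert_vertices = [(0.0, 0.0, 0.3), (1.0, 0.0, 0.5), (5.0, 5.0, 5.0)]

distances = nearest_distances(ai_vertices, expert_vertices)
summary = summarise_distances(distances)
```

Note that such distances are asymmetric: swapping the compared and reference models generally changes the result, so the direction of comparison should be kept fixed across scans.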

Statistical analysis

Statistical analyses were conducted using SPSS 29.0 (SPSS Inc., Chicago, IL, USA). The inter-rater and intra-rater reliability of the semi-automatic segmentation of the MCs performed by both investigators was assessed using a two-way mixed model intraclass correlation coefficient (ICC) for absolute agreement, evaluating agreement both between investigators and within each investigator across the 2 evaluation sessions.
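For readers unfamiliar with the ICC, the following minimal sketch computes a single-measures, absolute-agreement ICC from a two-way ANOVA decomposition. The paper does not state whether single or average measures were reported, nor which measurement was entered into the model, so both the variant chosen here and the example ratings are assumptions. It is not a substitute for the SPSS procedure.

```python
def icc_absolute_agreement(ratings):
    """Single-measures, absolute-agreement ICC from a two-way ANOVA.

    ratings: list of rows, one per subject, each holding one score per rater.
    """
    n = len(ratings)        # subjects
    k = len(ratings[0])     # raters (or sessions)
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )

# Hypothetical ratings: the second rater scores every subject 1 unit higher
offset_ratings = [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]
identical_ratings = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]

icc_offset = icc_absolute_agreement(offset_ratings)
icc_identical = icc_absolute_agreement(identical_ratings)
```

The offset example shows why absolute agreement is the stricter choice: two raters who rank subjects identically but differ by a constant are penalised, whereas a consistency-type ICC would ignore the offset.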

To compare the MC segmentation methods, volumetric deviations were assessed by calculating the overall average distance and standard deviation, which provided a quantitative measure of segmentation accuracy for each image. The computed distance between each operator and the AI was recorded, and the average distance for each scan was then calculated.

Average distance per scan

For each scan n, the average distance was determined by taking the mean of the distances obtained from both operator-AI comparisons:

$$\bar{D}_n = \frac{D_{1n} + D_{2n}}{2}$$

where:

$D_{1n}$ is the computed mean distance between the first investigator and AI for scan n,

$D_{2n}$ is the computed mean distance between the second investigator and AI for scan n.

Descriptive statistics were applied to the numerical results of the 3D evaluation, with data summarised as average ± standard deviation.
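The per-scan averaging and the descriptive summary can be sketched as follows. The distance values are hypothetical and serve only to show the arithmetic, and the function names are illustrative.

```python
import statistics

def per_scan_average(d1, d2):
    """Mean of the two operator-AI distances for each scan.

    d1, d2: distances (mm) for the first and second investigator, in the same scan order.
    """
    if len(d1) != len(d2):
        raise ValueError("Both lists must cover the same scans")
    return [(a + b) / 2 for a, b in zip(d1, d2)]

def describe(values):
    """Median, mean, sample standard deviation, and range of the values."""
    return {
        "median": statistics.median(values),
        "mean": statistics.fmean(values),
        "sd": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }

# Hypothetical distances in mm for three scans
d1 = [0.20, 0.30, 0.40]  # first investigator vs AI
d2 = [0.30, 0.30, 0.60]  # second investigator vs AI

averages = per_scan_average(d1, d2)
summary = describe(averages)
```

Reporting the median alongside the mean is useful here because a few scans with very large deviations can pull the mean well away from the typical value.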

The normality of numerical variables was assessed using the Shapiro-Wilk test, with p < 0.05 taken to indicate a departure from normality.

To compare segmentation deviations across third molar status categories (absent, erupted, impacted), the Kruskal-Wallis test was applied. Post hoc pairwise comparisons were conducted using the Mann-Whitney U test with Bonferroni correction to adjust for multiple comparisons.
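The logic of this comparison can be illustrated with a small self-contained sketch of the Kruskal-Wallis H statistic and a Bonferroni adjustment. It is not the SPSS implementation. The p-value shortcut relies on the chi-square approximation with 2 degrees of freedom, which applies only when exactly three groups are compared, and the pairwise Mann-Whitney U test itself is not implemented. The data and the pairwise p-values are hypothetical.

```python
import math

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for idx in order[i:j + 1]:
            ranks[idx] = shared
        i = j + 1
    return ranks

def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H statistic for a list of samples."""
    pooled = [x for group in groups for x in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12.0 / (n * (n + 1)) * total - 3.0 * (n + 1)
    counts = {}
    for x in pooled:
        counts[x] = counts.get(x, 0) + 1
    correction = 1.0 - sum(c ** 3 - c for c in counts.values()) / (n ** 3 - n)
    return h / correction

def p_value_three_groups(h):
    """Chi-square survival function with 2 degrees of freedom: exp(-h / 2)."""
    return math.exp(-h / 2.0)

def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Hypothetical deviations (mm) by third molar status
absent, erupted, impacted = [1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]
h_stat = kruskal_wallis_h([absent, erupted, impacted])
p_overall = p_value_three_groups(h_stat)
adjusted = bonferroni([0.01, 0.02, 0.50])  # hypothetical pairwise p-values
```

With three categories there are three pairwise comparisons, so the Bonferroni adjustment is equivalent to testing each pair at a significance level of 0.05 / 3.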

Results

Regarding third molar status, 60 scans on the left side showed the absence of a third molar, 34 had an erupted third molar (with the crown positioned above the alveolar ridge), and 56 presented with an impacted third molar (with the crown partially or fully located below the alveolar ridge). On the right side, 70 scans showed no third molar, 25 had an erupted third molar, and 55 had an impacted third molar. Each scan contributed bilateral MC segmentations, resulting in a total of 300 MCs for evaluation.

Inter- and intra-rater reliability assessments were conducted to quantify the consistency of semi-automated MC segmentation (Table 1). Inter-rater reliability, calculated as the concordance between 2 independent operators, demonstrated substantial agreement, averaging 84.5%. Given the close inter-rater values between day 0 and day 10, the results of the repeated semi-automated segmentation on day 10 were considered for evaluation. Intra-rater reliability, reflecting the reproducibility of segmentations by the same operator across repeated trials, achieved near-perfect agreement, averaging 95.5%. This underscores the precision and stability of the semi-automated protocol under controlled conditions.

Table 1

Inter- and intra-rater reliability analysis

| Category | | ICC (%) |
|---|---|---|
| Inter-rater reliability | Day 0 | 84 |
| | Day 10 | 85 |
| Intra-rater reliability | OMFR | 94 |
| | Trainee in OMFR | 97 |

Three-dimensional spatial deviations between AI-based and semi-automated MC segmentations were quantified using surface-to-surface distance metrics. The Shapiro-Wilk test confirmed a non-normal distribution (p < 0.05), and deviations were summarised using the median. The overall median deviation was 0.29 mm, with a standard deviation ranging from 0.25 to 0.37 mm. The per-scan average distance varied from 0.19 mm to 4.72 mm (Supplementary material).

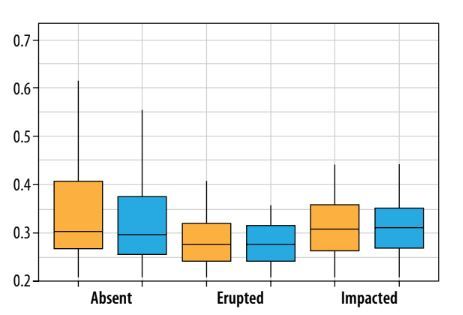

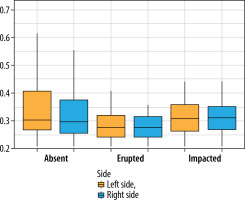

The box plot analysis (Figure 3) reveals distinct trends in segmentation accuracy across third molar categories. The absent category has the lowest deviation (0.27 mm), while the erupted category shows a minor increase (0.28 mm). The impacted category exhibits the highest deviations (0.32 mm). In terms of laterality, deviations are slightly higher on the left side than on the right.

Figure 3

Box plot showing the average distance between experts and AI, stratified by third molar status (absent, erupted, impacted) and location (left side, right side). The central horizontal line represents the median, the box denotes the interquartile range (IQR), and the whiskers extend to 1.5 times the IQR

Discussion

The aim of this study was to evaluate the accuracy and variability of a commercial AI-based tool (Diagnocat) for MC segmentation, with a particular focus on factors influencing segmentation precision, including the status of third molars. Building upon the pilot study [21], this investigation provides further insights into AI-driven segmentation accuracy by incorporating a more diverse cohort of patients and optimising CBCT image viewer settings [20]. While previous studies have explored AI performance in MC segmentation [17,18], the current study offers a detailed assessment of anatomical factors, such as the presence of impacted third molars, that may affect AI accuracy. Notably, we did not use DentalComs [22] for this study. Instead, our focus was on evaluating the overall volumetric conformity of overlapping structures by calculating the average distance. This metric, which quantifies the deviation between corresponding points on AI-generated and semi-automated segmentations, is particularly useful for assessing complex, non-regular anatomical structures like the MC [23-25].

Our results revealed a median deviation of 0.29 mm between AI and semi-automated expert segmentations, indicating strong overall agreement. This performance surpasses the 0.555 mm and 0.39 mm mean curve distance (MCD) reported by Jaskari et al. [26] and Järnstedt et al. [27], respectively. Unlike MCD, which quantifies contour-level discrepancies, our average distance metric evaluates deviations across the entire 3D surface, offering a more holistic assessment of volumetric alignment critical for complex structures like the MC. The observed variability, with standard deviations ranging from 0.25 to 0.37 mm, may reflect anatomical diversity in our cohort, a factor underrepresented in prior studies. This underscores the importance of training AI models on large heterogeneous datasets to improve robustness in clinically challenging scenarios.

Clinically, deviations ≤ 0.50 mm are considered acceptable for dental implant planning [28], and in our study, 88% of AI segmentations met this threshold, demonstrating strong potential for integration into preoperative workflows. However, the presence of outliers highlights the necessity of expert evaluation, particularly in anatomically complex regions, to improve segmentation accuracy and compensate for errors caused by under-segmentation and partial volume effects. Notably, scans 87 and 95 exhibited significant deviations, with average distances of 1.93 ± 3.47 mm and 4.72 ± 3.87 mm, respectively.

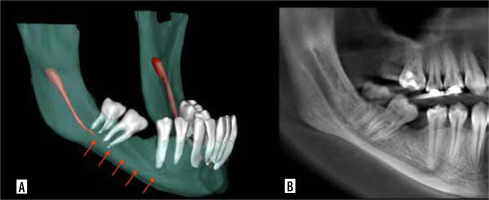

In scan 87 (Figure 4), AI-based segmentation of the MC was disrupted, probably due to the partial volume effect, which occurs when a voxel contains a mixture of different tissue types, leading to blurred boundaries and reduced contrast [29]. This effect may have impaired the ability of Diagnocat to distinguish the canal from surrounding bone, particularly in areas where the canal is narrow or obscured by anatomical complexity near the impacted third molar. Additionally, other factors, such as beam hardening artifacts or limited training data for complex cases, may have further contributed to the segmentation failure. Conversely, under-segmentation occurs when critical anatomical details are omitted from the AI-based segmentation [30]. A notable example is seen in scan 95 (Figure 5), where Diagnocat failed to segment the portion of the MC extending from the molar root region to the mental foramen. This limitation may stem from insufficient anatomical diversity in the AI’s training dataset, reducing its accuracy when encountering unfamiliar or complex anatomical patterns. In such cases, manual intervention by clinicians remains essential to ensure accurate segmentation and prevent potential surgical complications.

Figure 4

Mandibular canal of scan 87 with partial volume effect. A) AI-based segmentation. B) Sagittal view on CBCT image

An important finding in our study was the significant impact of third molar status on AI segmentation accuracy. The box plot analysis demonstrated that AI segmentation was most accurate when the third molar was absent (median: 0.27 mm) or erupted (median: 0.28 mm), whereas the accuracy declined in cases with impacted third molars (median: 0.32 mm). This reduction in accuracy can be attributed to the segmentation approach used by Diagnocat, which, like many AI-based tools, relies on a straight-line pattern based on the radiolucent trajectory of the MC [19]. While effective when the canal is clearly distinguishable, impacted third molars introduce additional radiodense structures that obscure the canal’s path, leading to segmentation errors. This limitation is particularly critical in clinical settings where the roots of impacted third molars overlap with the mandibular canal, because AI performs worst in these cases, precisely where accurate segmentation is most crucial for surgical planning. To address this limitation, AI models should be trained on more diverse datasets that include a wide range of third-molar impaction scenarios.

While there is limited literature directly assessing the influence of third molar status on MC segmentation accuracy, several studies have evaluated the capability of AI in detecting third molars and their relationship with the MC. For instance, Orhan et al. [31] assessed the diagnostic performance of Diagnocat for third molar evaluation on CBCT, reporting high accuracy. Additionally, while multiple studies demonstrate AI’s promising ability to assess third molar and MC spatial relationships on panoramic radiographs [32-34], Kazimierczak et al. [35] highlighted its limitations in evaluating root apex and MC proximity on computed tomography images, reporting low diagnostic accuracy. These findings suggest that while AI performs well in detecting third molars, its ability to accurately segment the MC in cases of impaction remains an area for further investigation.

Despite the strengths of our study, including a standardised methodology, large sample size (n = 150), and robust comparison to semi-automated expert segmentations, there are notable limitations. The retrospective design and single-centre dataset may limit the generalisability of our results. Additionally, the proprietary nature of Diagnocat restricts transparency regarding the decision-making process. Open-source AI models could offer better insight into segmentation mechanisms, enabling improvements in algorithmic performance. Furthermore, our dataset excluded cases with artifacts and pathologies, which may lead to an overestimation of AI performance in real-world scenarios. Future research should focus on incorporating multi-centre cohorts, expanding datasets to include more anatomically diverse cases (particularly those with impacted third molars), and integrating open-source AI models and advanced image-processing techniques to enhance segmentation accuracy, generalisability, and reliability in complex anatomical regions.

Conclusions

AI-driven segmentation demonstrated high accuracy with low median deviation (0.29 mm), with 88% of cases within clinically acceptable limits. However, segmentation errors increased in cases with impacted third molars, emphasising the need for diverse AI training datasets. Future research should focus on multi-centre validation and advanced image-processing techniques to improve robustness in complex cases.